Lost in Translation 2 - ASCII and EBCDIC on z/OS

In the first article in this series, we introduced EBCDIC code pages and how they work with z/OS. But z/OS became more complicated when UNIX System Services (USS) introduced ASCII into the mix. Web enablement and XML add UTF-8 and other Unicode encodings as well. So how do EBCDIC, ASCII and Unicode work together?

ASCII Code Pages

If you are a mainframe veteran like me, you work in EBCDIC. You use a TN3270 emulator to work with TSO/E and ISPF, you use datasets, and every program is EBCDIC. Or at least that's the way it used to be.

UNIX System Services (USS) has changed all that. Let's take a simple example. Provided it has been set up by the Systems Programmer, you can now use a client such as PuTTY to access z/OS using telnet, SSH or rlogin. From there, you get a standard UNIX shell that will feel like home to anyone who has used UNIX on any platform. Telnet, SSH and rlogin are ASCII sessions, so z/OS (or more specifically, TCP/IP) will convert everything going to or from that client between ASCII and EBCDIC.
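To get a feel for what that conversion involves, here is a minimal Python sketch. It is only an illustration, not how TCP/IP actually does it. Python's standard library has no IBM-1047 codec, so cp037 (EBCDIC US/Canada) stands in for it, and latin-1 stands in for ISO-8859-1. The two helper functions are hypothetical.

```python
# Illustrative only: cp037 (EBCDIC US/Canada) stands in for IBM-1047,
# which Python's standard library does not provide.
EBCDIC = "cp037"
ASCII = "latin-1"  # ISO-8859-1

def ascii_to_ebcdic(data: bytes) -> bytes:
    """Translate bytes arriving from an ASCII client into EBCDIC."""
    return data.decode(ASCII).encode(EBCDIC)

def ebcdic_to_ascii(data: bytes) -> bytes:
    """Translate EBCDIC output back into ASCII for the client."""
    return data.decode(EBCDIC).encode(ASCII)

typed = b"ls -l"                 # what the telnet client sends
host = ascii_to_ebcdic(typed)    # what the z/OS shell would see
print(typed.hex(" "))            # 6c 73 20 2d 6c
print(host.hex(" "))             # 93 a2 40 60 93
print(ebcdic_to_ascii(host))     # b'ls -l'
```

The same characters get completely different byte values. For example, the letter l is X'6C' in ASCII but X'93' in EBCDIC.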

Like EBCDIC, extended ASCII has different code pages for different languages and regions, though not nearly as many. Most English speakers will use ISO-8859-1, which also covers other Western European languages such as Norwegian. Arabic speakers use ISO-8859-6, and Russians will probably go for ISO-8859-5. In UNIX, the character set you use is part of the locale, which also includes your preferred currency symbol and date formats. The locale is set by updating the LANG or LC_* environment variables. You then set the character set on your telnet client to match, and you're away.

Here is how it's done on PuTTY: the setting is "Remote character set", under Window > Translation.

From the USS shell on z/OS, this works exactly the same way (it is a POSIX-compliant shell, after all). So to change the locale to France, we set LANG. In the default /bin/sh shell, use export. If you use tcsh, use setenv instead:

export LANG=fr_FR
setenv LANG fr_FR

The first two letters are the language code from the ISO 639-1 standard, and the two after the underscore are the country code from ISO 3166-1.
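A locale name can carry more than the language and country. POSIX-style names follow the pattern language[_territory][.codeset][@modifier], for example fr_FR.ISO8859-1. The sketch below splits a name such as the LANG value into those parts. parse_locale is a hypothetical helper, and it does not check the codes against the ISO 639-1 or ISO 3166-1 lists.

```python
import os
from typing import NamedTuple, Optional

class Locale(NamedTuple):
    language: str             # ISO 639-1 code, e.g. "fr"
    territory: Optional[str]  # ISO 3166-1 code, e.g. "FR"
    codeset: Optional[str]    # character set, e.g. "ISO8859-1"
    modifier: Optional[str]   # variant, e.g. "euro"

def parse_locale(name: str) -> Locale:
    """Split language[_territory][.codeset][@modifier] into its parts."""
    rest, _, modifier = name.partition("@")
    rest, _, codeset = rest.partition(".")
    language, _, territory = rest.partition("_")
    return Locale(language, territory or None, codeset or None, modifier or None)

print(parse_locale("fr_FR"))
# Locale(language='fr', territory='FR', codeset=None, modifier=None)
print(parse_locale(os.environ.get("LANG", "C")))
```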

ASCII in Files

Converting to and from ASCII on z/OS consumes resources. If you're only working with ASCII data, it would be a good idea to store the data in ASCII and avoid the overhead of always converting between EBCDIC and ASCII.

The good news is that this is no problem. ASCII is also a Single Byte Character Set (SBCS), so most z/OS instructions and functions work just as well for ASCII as they do for EBCDIC. Database managers generally just store bytes. So if you don't need them to display the characters in a readable form on a screen, you can easily store ASCII in z/OS datasets, USS files and z/OS databases.
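Byte-for-byte operations such as moves and equality compares don't care which character set the bytes are in. Sorting is one place where the character set does matter. In ASCII, digits (X'30' to X'39') sort before uppercase letters, which sort before lowercase letters. EBCDIC reverses this: lowercase letters (from X'81') come first, then uppercase (from X'C1'), then digits (X'F0' to X'F9'). A small Python sketch, again using cp037 as a stand-in for IBM-1047:

```python
words = ["abc", "ABC", "123"]

# Sort by the raw bytes each string would have in each character set.
ascii_order = sorted(words, key=lambda w: w.encode("latin-1"))
ebcdic_order = sorted(words, key=lambda w: w.encode("cp037"))

print(ascii_order)   # ['123', 'ABC', 'abc']
print(ebcdic_order)  # ['abc', 'ABC', '123']
```

So a program that sorts or range-checks ASCII data with EBCDIC assumptions can give different results, even though every individual byte is handled correctly.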

The problem is displaying that information. Using ISPF to edit a dataset with ASCII data will show gobbledygook: unreadable characters. ISPF browse has similar problems.
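The gobbledygook is simply ASCII bytes being interpreted as EBCDIC. This sketch shows the effect in both directions. cp037 again stands in for the EBCDIC code page the ISPF session would use.

```python
# ASCII (ISO-8859-1) bytes displayed by something that assumes EBCDIC.
ascii_bytes = "HELLO".encode("latin-1")      # 48 45 4c 4c 4f in hex
print(repr(ascii_bytes.decode("cp037")))    # not "HELLO"

# The same mix-up in reverse: EBCDIC bytes shown as ISO-8859-1.
ebcdic_bytes = "HELLO".encode("cp037")       # c8 c5 d3 d3 d6 in hex
print(repr(ebcdic_bytes.decode("latin-1")))  # 'ÈÅÓÓÖ'
```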

Traditional z/OS datasets have no way of recording which character set they hold. However, z/OS USS files have a tag that can specify the character set used. For example, look at the ls listing of this USS file:

>ls -T file1.txt

t IBM-1047 T=on file1.txt

You can see that the code page used is IBM-1047, the default EBCDIC code page for z/OS UNIX. The t at the left of the output indicates that the file holds text, and T=on indicates that the file holds uniformly encoded text data. However, here is another file:

>ls -T file2.txt

t ISO8859-1 T=on file2.txt

The character set is ISO8859-1: extended ASCII for English and other Western European languages. This tag can be set using the USS chtag command (for example, chtag -tc ISO8859-1 file2.txt). It can also be set when mounting a USS file system, which sets the default tag for all files in that file system.

This tag can be used to determine how the file will be viewed. From z/OS 1.9, if you use ISPF edit or browse to access a USS file, ASCII characters will automatically be displayed if the file's tag is set to CCSID 819 (ISO8859-1). The TSO/E OBROWSE and OEDIT commands, ISPF option 3.17 and the ISHELL interface all use ISPF edit and browse.

If storing ASCII in traditional z/OS datasets, ISPF BROWSE and EDIT will not

automatically convert from ASCII. Nor will it convert for any other character

set other than ISO8859-1. However you can still view the ASCII data using the

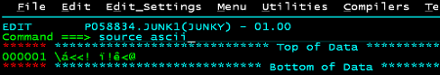

SOURCE ASCII command. Here is an example of how this command works.
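The display behaviour described above for tagged USS files can be modelled in a few lines. This is a hypothetical sketch, not ISPF's implementation. display_text is an invented name, and cp037 stands in for IBM-1047. A file tagged CCSID 819 is converted for display. Anything else, tagged or untagged, is shown as if it were EBCDIC.

```python
from typing import Optional

# Hypothetical model of tag-driven display; not ISPF's actual logic.
# cp037 stands in for IBM-1047, which Python's standard library lacks.
EBCDIC_CODEC = "cp037"
ISO8859_1_CCSID = 819

def display_text(data: bytes, tag_ccsid: Optional[int]) -> str:
    """Return the text an EBCDIC-based viewer would show for a file.

    Only a CCSID 819 tag triggers conversion from ASCII; untagged files
    and files with any other tag are displayed as if they were EBCDIC.
    """
    if tag_ccsid == ISO8859_1_CCSID:
        return data.decode("latin-1")
    return data.decode(EBCDIC_CODEC)

ascii_file = "Hello".encode("latin-1")    # like file2.txt, tagged ISO8859-1
print(display_text(ascii_file, 819))      # Hello
print(display_text(ascii_file, None))     # unreadable: ASCII read as EBCDIC
```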

ASCII in Databases

Some database systems also store the code page. For example, have a look at the following output from the SAS CONTENTS procedure (PROC CONTENTS). This shows the definition of a SAS data set. You can see that it is encoded in EBCDIC 1142 (Denmark/Norway):

THE CONTENTS PROCEDURE

DATA SET NAME          DS1.CICSTXN                         OBSERVATIONS           81023
MEMBER TYPE            DATA                                VARIABLES              114
ENGINE                 BASE                                INDEXES                0
CREATED                28. APRIL 2011 TORSDAG 03:38:16     OBSERVATION LENGTH     840
LAST MODIFIED          28. APRIL 2011 TORSDAG 03:38:16     DELETED OBSERVATION
PROTECTION                                                 COMPRESSED             NO
DATA SET TYPE                                              SORTED                 NO
LABEL
DATA REPRESENTATION    MVS_32
ENCODING               EBCDIC1142 DENMARK/NORWAY (EBCDIC)
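Why does an encoding attribute like this matter? Even EBCDIC code pages disagree about some characters. Python has no codec for EBCDIC 1142, so this sketch uses two EBCDIC code pages that Python does ship: cp037 (US/Canada) and cp500 (International). The square brackets are a classic trouble spot.

```python
# Letters share the same bytes, but "[" and "]" do not.
for codepage in ("cp037", "cp500"):
    print(codepage, "A[]".encode(codepage).hex(" "))

# The byte cp037 uses for "[" is a different character under cp500.
misread = "[".encode("cp037").decode("cp500")
print(repr(misread))
```

Without a recorded encoding, a program reading the data has no way to tell which interpretation is right.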

DB2 also plays this kind of ball. Every DB2 table has an ENCODING_SCHEME value recorded in the SYSIBM.SYSTABLES catalog table, which overrides the default CCSID. This value can also be overridden in the SQL Descriptor Area for SQL statements, or in the stored procedure definition for stored procedures. You can also specify a CCSID when binding an application, or in DB2 DECLARE statements and CAST specifications. In other words, if you define tables and applications correctly, DB2 will do all the translation for you.

The Problem with Unicode

Unicode has one big advantage over EBCDIC and ASCII: there are no code pages. Every character is represented in the same table. And the standards people have made sure that Unicode has enough room for a lot more characters. Even Star Trek's Klingon script has been proposed for inclusion, though it was not accepted.

But of course this would be too simple. There are actually a few different Unicode encodings out there:

- UTF-8: variable-length, using one to four bytes per character. The basic ASCII characters (a-z, A-Z, 0-9) take one byte each, with the same values as in ASCII.
- UTF-16: variable-length, using two or four bytes per character. Most characters in common use take two bytes.
- UTF-32: each character is four bytes.
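The difference between the three is easy to see by encoding the same characters each way. This is a short Python sketch. It uses the big-endian (-be) codecs so that Python does not add a byte-order mark to the count.

```python
def byte_sizes(ch: str) -> dict:
    """Number of bytes one character takes in each Unicode encoding."""
    # The -be codecs are big-endian and add no byte-order mark.
    return {enc: len(ch.encode(enc)) for enc in ("utf-8", "utf-16-be", "utf-32-be")}

# "A" (Basic Latin), "é" (Latin-1 Supplement), "€" and an emoji.
for ch in ("A", "\u00e9", "\u20ac", "\U0001F600"):
    print(f"U+{ord(ch):04X}", byte_sizes(ch))
```

The letter A takes 1, 2 and 4 bytes respectively. é takes two bytes in UTF-8, even though it fits in one byte in ISO-8859-1, and € takes three. Only characters beyond U+FFFF, such as the emoji, need four bytes in UTF-16 (a surrogate pair).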

Whichever Unicode encoding you use, they all have the same drawback: one character does not necessarily use one byte. z/OS string operations generally assume that one byte is one character.
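Here is what can go wrong when code that assumes one byte per character meets UTF-8. If you truncate a field to a fixed number of bytes, as you would for a fixed-length record, you can cut a character in half. safe_truncate is a hypothetical helper that backs off to the last complete character. It is a simple sketch, not a library routine.

```python
def safe_truncate(data: bytes, limit: int) -> bytes:
    """Cut UTF-8 bytes to at most `limit` without splitting a character."""
    cut = data[:limit]
    # Drop trailing bytes until what remains decodes cleanly.
    while cut:
        try:
            cut.decode("utf-8")
            return cut
        except UnicodeDecodeError:
            cut = cut[:-1]
    return cut

name = "Zo\u00eb".encode("utf-8")    # "Zoë": 3 characters, 4 bytes
print(len("Zo\u00eb"), len(name))    # 3 4
print(name[:3])                      # b'Zo\xc3' - half of the ë
print(safe_truncate(name, 3))        # b'Zo'
```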

Most high-level languages have some sort of Unicode support, including C, COBOL and PL/I. However, you need to tell the compiler that you're using Unicode, through compiler options or data declarations (for example, COBOL's USAGE NATIONAL or PL/I's WIDECHAR).

z/OS also has instructions for converting between Unicode, UTF-8, UTF-16 and UTF-32. By Unicode, IBM means Unicode Basic Latin: the first 128 characters of Unicode (U+0000 to U+007F), which fit into one byte.

A problem with anything using Unicode on z/OS is that it can be expensive in terms of CPU use. To help out, IBM has introduced new machine instructions oriented towards Unicode. Many of the latest high-level language compilers use these when working with Unicode data, making these programs faster. If you have a program that uses Unicode and hasn't been recompiled for a few years, consider recompiling it. You may see some performance improvements.

What This Means

z/OS is still EBCDIC and always will be. However, IBM has realised that z/OS needs to talk to the outside world, so other character encoding schemes like ASCII and Unicode need to be supported, and are.

z/OS USS files have an attribute to tell you the encoding scheme, and some database systems like SAS and DB2 also give you an attribute to set. Otherwise, you need to keep track yourself of how your strings are encoded.

This second article has covered how EBCDIC, ASCII and Unicode work together with z/OS. In the final article in this series of three, I'll look at converting between them.

David Stephens
