technical: Is WLM Discretionary Really a Good Idea?

Let me tell you a story about one of my clients. I recommended that jobs be moved into WLM service classes with discretionary goals. Unfortunately, this affected their online transactions in the middle of the night. But how could this happen, and does this mean that discretionary is bad?

A Quick Review of WLM Discretionary

z/OS administrators configure z/OS Workload Manager (WLM) with service classes. Each service class has one or more periods, and each period has a goal. The goal of interest here is discretionary: work in a discretionary period gets CPU when no one else wants it.

z/OS administrators can set classification rules to assign workloads to these service classes based on factors like jobname, userid, and transaction name. In our example, we used the jobname to assign the jobs to a service class that looked something like this:

             -- Period --  ------------------- Goal -------------------
    Action   #  Duration   Imp.  Description
      __     1  _________   _    Discretionary
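To make the classification step concrete, here is a minimal Python sketch of first-match rules that map a jobname to a service class. It only models the idea: it is not WLM's rule syntax, and every jobname pattern and service class name in it (IMSACC*, BATCHDIS, and so on) is a hypothetical placeholder rather than the client's real configuration.

```python
from fnmatch import fnmatchcase

# Hypothetical rules: (jobname pattern, service class). First match wins.
RULES = [
    ("IMSARCH*", "BATCHHI"),   # time-critical IMS log archive jobs
    ("IMSACC*", "BATCHDIS"),   # nightly IMS log accumulation jobs
    ("RPT*", "BATCHDIS"),      # reporting
    ("SMF*", "BATCHDIS"),      # SMF processing
]
DEFAULT_CLASS = "BATCHMED"     # hypothetical default for unmatched batch


def classify(jobname: str) -> str:
    """Return the service class of the first rule matching the jobname."""
    name = jobname.upper()
    for pattern, service_class in RULES:
        if fnmatchcase(name, pattern):
            return service_class
    return DEFAULT_CLASS


if __name__ == "__main__":
    for job in ("IMSACC01", "IMSARCH1", "PAYROLL1"):
        print(job, "->", classify(job))
```

In this sketch BATCHDIS stands in for the service class with the single discretionary period shown above. Rule order matters: a more specific pattern must come before a broader one that would also match.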

These batch jobs now only used CPU when no one else wanted it. Well, that's not quite right, but I'll get to that in a second.

Why Discretionary

So, why did I (perhaps, foolishly) recommend discretionary? Our client had experienced performance problems from a lack of CPU. There were occasional processing spikes that could not be predicted. We implemented a few things to protect them from these spikes, including assigning jobs to our discretionary service class.

The idea is simple: make jobs that aren't time-critical discretionary, such as reporting, housekeeping, and SMF processing jobs. Now, when these spikes occurred, the discretionary jobs would step back for a time and allow time-sensitive workloads (like online transactions) to use all the available CPU.

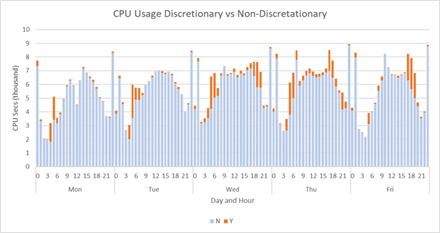

I've also recommended discretionary to other clients for CPU protection, as well as to identify jobs that can wait for CPU. Discretionary workloads can be viewed on CPU consumption graphs from SMF type 72 records, like this:

Sites can see workloads that are not time-sensitive, and better set CPU capacity caps as explained in this article. This can reduce software licensing and other costs.

For me, this is the power of discretionary. It's not for unimportant jobs; it is for jobs that are not time-sensitive. IMS log accumulation jobs are one example. When IMS logs fill, our client automatically archived and cleared them for re-use. These IMS archive jobs are time-critical: you don't want to run out of IMS logs. But before you say 'aha!', these aren't the jobs we're talking about. Our client performed an 'accumulation' of these archived IMS logs every night to make IMS database recovery faster. These accumulation jobs were not time-sensitive, and it was these jobs we had in mind.

How Discretionary Can Affect Production

In this case, the IMS accumulation jobs ran during an overnight batch peak period. As these jobs were discretionary, they waited for more important jobs to execute. What we found was that these jobs would start, then pause for lack of CPU, and occasionally (but not often) our IMS transaction issues would follow.

These discretionary jobs would get an ENQ for the IMS RECON dataset, which is essential for IMS Database Recovery and Control (DBRC). In rare cases, a job would hold this ENQ while pausing for other (non-discretionary) batch. During this pause, IMS would fill a log, attempt to switch to another, and fail to obtain the RECON ENQ. This is what held up the online transactions.
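The sequence above can be illustrated with a toy single-CPU simulation in Python. It is a deliberately simplified sketch, not a model of the z/OS dispatcher: time is divided into abstract ticks, the discretionary job takes the ENQ at tick 0 and only runs on ticks when no higher-importance work wants the CPU, and the function name and all parameters are illustrative assumptions.

```python
def ims_wait_ticks(busy_ticks, job_cpu_needed, ims_request_tick, horizon=1000):
    """Return how many ticks IMS waits for an ENQ held by a discretionary job.

    busy_ticks: set of ticks in which higher-importance work uses the CPU.
    job_cpu_needed: CPU ticks (>= 1) the discretionary job needs before it
        releases the ENQ, which it acquires at tick 0.
    ims_request_tick: tick at which IMS asks for the same ENQ.
    Returns None if the ENQ is not released within the horizon.
    """
    remaining = job_cpu_needed
    for tick in range(horizon):
        if tick in busy_ticks:
            continue  # discretionary work gets no CPU on a busy tick
        remaining -= 1
        if remaining <= 0:
            release_tick = tick + 1  # ENQ is free from the next tick
            return max(0, release_tick - ims_request_tick)
    return None


if __name__ == "__main__":
    # Quiet system: the job finishes before IMS needs the ENQ.
    print(ims_wait_ticks(set(), job_cpu_needed=3, ims_request_tick=5))
    # Batch peak on ticks 1..39: the job stalls while holding the ENQ.
    print(ims_wait_ticks(set(range(1, 40)), job_cpu_needed=3, ims_request_tick=5))
```

On the quiet system IMS does not wait at all. With the batch peak, the job needs only three ticks of CPU but IMS waits 37 ticks, because the holder of the ENQ cannot get dispatched to finish and release it.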

If you read IMS documentation, you will see that IMS RECON ENQ issues are a thing. IMS has several recommendations, some as extreme as placing RECON datasets in their own catalogs that reside on the same disk volume.

Protections for Discretionary

The IMS change accumulation jobs were changed back to their old (non-discretionary) service classes. However, two z/OS features are designed to protect against this kind of problem.

The first is something called WLM Discretionary Goal Management. This may cap the CPU usage of certain workloads that exceed their performance goals, allowing discretionary batch jobs to get some of the CPU. It is enabled by default but can be disabled in the WLM configuration. In our case, it was enabled, but it didn't help our problem. This was because higher importance workloads were not exceeding their performance goals.
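As a rough illustration of why the feature did not help here, the Python sketch below checks whether a service class period would be a candidate for being capped in favor of discretionary work. The specific thresholds (a velocity goal of 30 or less or a response time goal over one minute, a performance index below 0.7, and not belonging to a resource group) are assumptions based on IBM's published description of the feature and should be verified against the z/OS MVS Planning: Workload Management manual for your release. The class and field names are hypothetical.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class Period:
    name: str
    performance_index: float                    # below 1.0 = beating the goal
    velocity_goal: Optional[int] = None         # percent, if a velocity goal
    response_goal_secs: Optional[float] = None  # if a response time goal
    in_resource_group: bool = False


def may_donate_to_discretionary(p: Period) -> bool:
    """True if the period looks like a candidate to be capped (assumed rules)."""
    loose_goal = (
        (p.velocity_goal is not None and p.velocity_goal <= 30)
        or (p.response_goal_secs is not None and p.response_goal_secs > 60)
    )
    return loose_goal and p.performance_index < 0.7 and not p.in_resource_group


if __name__ == "__main__":
    overachiever = Period("BATCHMED", performance_index=0.5, velocity_goal=20)
    just_meeting = Period("BATCHMED", performance_index=0.9, velocity_goal=20)
    print(may_donate_to_discretionary(overachiever))
    print(may_donate_to_discretionary(just_meeting))
```

Under these assumed rules, a period that is only just meeting its goal is never capped, which matches what happened in our case: nothing was overachieving, so nothing was held back for the discretionary jobs.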

The second is the IEAOPTxx PARMLIB parameter ERV. This setting tells z/OS how long a low-importance (including discretionary) job can have an elevated priority if it is causing ENQ contention. With an elevated priority, the job can get CPU to finish and release the ENQ: addressing our ENQ problem. The IBM default is 500 service units; our client had it set to 750. This equated to around 0.02 CPU seconds. IBM recommend that this be increased to 50,000 service units. This would likely have avoided our problem.
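To put these ERV values in perspective, the short Python calculation below converts each one to approximate CPU time. It assumes the figure quoted above, 750 service units being roughly 0.02 CPU seconds on this client's machine; the real conversion rate depends on the processor model, so the results are indicative only.

```python
# Assumption: 750 service units ~= 0.02 CPU seconds on the client's machine.
# The true rate depends on the processor model.
SU_PER_CPU_SECOND = 750 / 0.02  # about 37,500 service units per CPU second


def erv_cpu_seconds(erv_service_units: float) -> float:
    """Approximate CPU time an ERV value allows a promoted ENQ holder."""
    return erv_service_units / SU_PER_CPU_SECOND


if __name__ == "__main__":
    settings = [
        ("IBM default", 500),
        ("client setting", 750),
        ("IBM recommendation", 50_000),
    ]
    for label, erv in settings:
        print(f"{label:>18}: ERV={erv:>6} -> {erv_cpu_seconds(erv):.3f} CPU seconds")
```

On that assumption, the default gives a promoted job about 0.013 CPU seconds and the recommended value about 1.3 CPU seconds: a far more realistic window in which to finish the work and release the ENQ. In IEAOPTxx the recommendation would be coded as ERV=50000.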

The Death of Discretionary?

Such a tale could stop many from using discretionary workloads, and I've seen this in a few sites. It makes sense to be wary of discretionary workloads that may use important ENQs. However, discretionary is still a valuable tool. And don't take my word for it. IBM's Washington Systems Center (WSC) provides a WLM 'starter' policy that includes discretionary. Watson & Walker also provide a sample WLM policy with discretionary workloads.

One argument is to use importance 5, the lowest importance, rather than discretionary. And this seems logical; importance 5 is low importance, but may still get CPU if it is not meeting its goals. However, an importance 5 job not meeting its goals can take CPU away from an importance 1 workload exceeding its goals. If WLM configurations aren't perfect (and they sometimes aren't), this can cause a problem.

Most of my clients use discretionary: the CPU usage graph shown earlier is from such a client. Reporting, backups, user batch, and more are excellent discretionary candidates that will improve a z/OS system's protection from processing spikes, and potentially allow them to set more aggressive z/OS system caps.

Enabling WLM Discretionary Goal Management and setting ERV to IBM's recommendation will provide protection from ENQ and similar issues where discretionary workloads 'lock up' resources that higher priority workloads need.

David Stephens
