Capping: It's Really Not That Bad

A few months ago, I was at one site and mentioned capping. The comments I got back were things like "we can't kill our application's response times." And this is a typical response: people associate capping with end-user pain. But it doesn't have to be like this.

Capping Strategies

When capping, there are three major strategies you can choose from:

- Defensive: capping is implemented at current peak CPU consumption. The objective of this strategy is not to reduce CPU consumption, but to protect against runaway or increased CPU consumption.

- Conservative: workloads are capped so that CPU is reduced with little or no effect on user experiences or response times. This strategy concentrates on 'squashing' low importance work such as housekeeping batch or development compile jobs, while allowing high priority workloads the CPU they need.

- Aggressive: workloads are capped to impact users' experiences during peak periods. That's right, this strategy plans to affect user service times during peak periods. It aims to educate users to use more CPU outside of peak periods. So, if a developer knows that their compiles will take a long time on the first day of each month, they will hopefully change their work practices to avoid this day for their non-critical work.

To some of my clients, simply introducing these three options is a surprise. Yes, you can actually do capping without affecting any service levels. I'll say that again: capping can be done with no user impact. You can think of capping as a sliding scale: the more CPU savings, the higher the impact.

Let's look a bit closer at these three strategies.

Defensive Capping

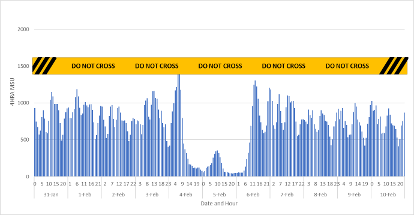

Consider the following graph of CPU usage for a typical site. The peak four-hour rolling average (4HRA) MSUs are far less than the available capacity. The good news is that if the incoming workloads increase, there's spare CPU to cater for that demand. The bad news is that anything can use this spare capacity. z/OS is designed so that if CPU is available, anyone can use it. This could be your core online IMS system. Or a developer submitting an ISPF SuperC batch job to search through some very large files. Or a test program in an infinite loop. Anything can use it.
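To make the 4HRA concrete, here is a minimal sketch of how a four-hour rolling average could be computed from a series of MSU samples. The function name `rolling_4hra`, the `samples_per_hour` parameter and the 5-minute default interval are assumptions for illustration; until a full four hours of samples exist, this sketch simply averages what it has so far, which may differ from how z/OS itself handles that period.

```python
from collections import deque


def rolling_4hra(msu_samples, samples_per_hour=12):
    """Return the four-hour rolling average for each MSU sample.

    Assumes evenly spaced samples (12 per hour = 5-minute intervals).
    Early values average only the samples seen so far (a simplification).
    """
    window = 4 * samples_per_hour
    recent = deque(maxlen=window)
    total = 0.0
    averages = []
    for msu in msu_samples:
        if len(recent) == window:
            # The oldest sample is about to drop out of the window.
            total -= recent[0]
        recent.append(msu)
        total += msu
        averages.append(total / len(recent))
    return averages
```

The peak of the returned list is the figure that a capping discussion usually starts from, for example `max(rolling_4hra(samples))`.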

So, there's a good chance that the software bills for this site are fluctuating from month to month with a changing peak CPU usage.

What we can do is implement a cap at, say, 1500 MSUs. This is a little more than our current peak usage, so it allows enough spare capacity for a small workload increase, but prevents any 'rogue' workloads from suddenly increasing CPU usage by too much.

With this strategy, the peak CPU usage is monitored. If incoming workloads are increasing, you'll want to increase this cap to suit. Similarly, decreasing or more efficient processing may provide an opportunity to decrease this defensive cap without affecting users.
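As a rough sketch of this idea, a defensive cap can be derived from the monitored peak plus a small headroom, then rounded up to a convenient figure. The function `defensive_cap`, the headroom percentage, the rounding step and the 1400 MSU peak in the usage note are all hypothetical values chosen for illustration, not figures taken from the graph.

```python
import math


def defensive_cap(peak_4hra_msu, headroom_pct=10, round_to=50):
    """Suggest a defensive cap: observed peak plus headroom, rounded up.

    headroom_pct and round_to are illustrative assumptions; pick values
    that suit how quickly your workloads are expected to grow.
    """
    raw = peak_4hra_msu * (100 + headroom_pct) / 100
    return math.ceil(raw / round_to) * round_to
```

For example, `defensive_cap(1400, headroom_pct=5)` gives 1500. Re-running the calculation whenever the monitored peak changes shows whether the cap should move up or down.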

Conservative Capping

Defensive capping is unlikely to reduce your current costs: we're protecting against future cost blowouts. Conservative capping attempts to squash non-critical workloads to get some cost savings now. But how do you identify these 'non-critical' workloads?

The fact is that you already do this with z/OS WLM. You have service classes defined - some will get more CPU resources, some less. One of the settings in WLM is an importance level for each service class - from 1 (very important) to 5 (least important). A further, lower level is assigned to workloads that only get CPU resources if no one else needs them - discretionary.

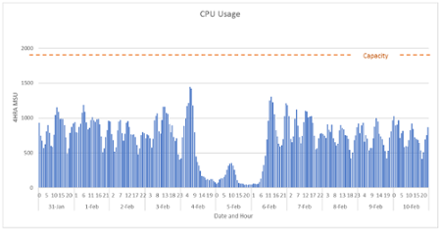

The following graph shows an example of the CPU usage broken up by importance level - the lowest importance levels are at the top (in black and grey). We've used an importance of 6 to represent discretionary; this makes it easier to graph. When I'm beginning to plan for capping, this is the first thing I look at. In this case, the lowest importance levels consume most of the CPU, though there's a small 'blip' where importance level 3 jumps up on 02-Feb.

From this type of graph, we can start to plan how much we can 'squash' the workloads. In this example, if WLM is set up correctly, we could probably set a limit at something like 550 MSUs without affecting higher importance workloads. Compare this with the current 930 MSU peak - a big difference.
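The reasoning behind a figure like this can be sketched in code: find the peak MSUs consumed by the importance levels you want to protect, and treat that as the floor for the cap. The function `protected_peak` and the sample data are hypothetical (the numbers are invented, not read from the graph), and importance 6 stands for discretionary as in the graph. Ideally the inputs would be 4HRA values per importance level rather than raw interval values.

```python
def protected_peak(intervals, protect_up_to):
    """Peak MSUs used by importance levels 1..protect_up_to.

    intervals is a list of {importance_level: msu} dicts, one per
    measurement interval. A cap below the returned value would be
    expected to squeeze the protected workloads.
    """
    return max(
        sum(msu for level, msu in usage.items() if level <= protect_up_to)
        for usage in intervals
    )


# Hypothetical measurements; importance 6 represents discretionary work.
sample_intervals = [
    {1: 80, 2: 60, 3: 250, 4: 200, 5: 150, 6: 100},
    {1: 90, 2: 70, 3: 380, 4: 220, 5: 120, 6: 50},
    {1: 100, 2: 90, 3: 300, 4: 250, 5: 100, 6: 40},
]

floor_protecting_1_to_3 = protected_peak(sample_intervals, protect_up_to=3)
total_peak = protected_peak(sample_intervals, protect_up_to=6)
```

With this sample data, protecting importance 1 to 3 gives a floor of 540 MSUs against a total peak of 930. Lowering `protect_up_to` gives a lower floor, at the price of affecting more important work.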

Aggressive Capping

There's no doubt that aggressive capping is for the brave. But to the courageous go the spoils: this is where the maximum cost savings are to be had. Again, the type of graph shown above is a great place to start. If we're up for an aggressive cap, we may be interested in squashing more than the lowest importance levels. For example, we may decide that we're willing to affect importance level 3 workloads. If this were the case, we could possibly cap our system at 200 MSUs, far less than our conservative 550 MSU value, and a really big decrease from the current 930 MSU peak.

Choosing the Capping Value

In our above examples, we've chosen some possible capping values based on the above graphs. In reality, the best capping value is not that easy to find. Values that may appear to be suitable on paper can hurt service levels more than predicted. So, a measured approach to implementing capping needs to be used. We cover this more in our partner article.

Conclusion

When many people think of capping, they think of angry users and pain. And I've seen examples where this has been the reality. But it doesn't need to be this way. Capping can be implemented to protect against CPU usage increases, or to gain some benefits without affecting users. Those willing to penalise users for consuming CPU in peak periods will be interested in a more aggressive capping strategy for maximum savings.

David Stephens
