LongEx Mainframe Quarterly - February 2020

Over the past 18 months, we've been working with a client and our partner, CPT Global, to implement Sysplex: increasing application resilience by implementing and using Sysplex features. But the client had been using Sysplex for years. In fact, it's difficult to start a z/OS system that isn't in a Sysplex. So, what did we actually do?

Sysplex vs Sysplex Features

Everyone runs a Sysplex - even a single z/OS system (a Monoplex). This means that systems can share DASD, tape drives, ENQs, and more. They can also use coupling facility structures and XCF signalling. These are the 'out of the box' features.

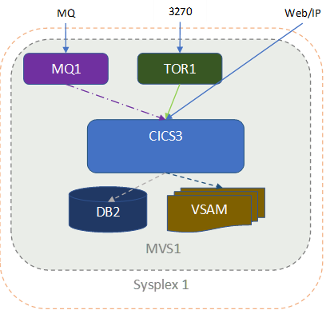

But these features don't really help application resilience. For that, we need Sysplex-based features supplied by transaction managers and database systems. For example, DB2 and IMS data sharing allow tasks on different z/OS systems to share database data. If DB2 or IMS/DB on one system fails, the other can still work. IMS shared queues (using the Common Queue Server, CQS) do something similar for IMS Transaction Manager: IMS messages are shared by multiple IMS subsystems, so if one goes down, the other can continue processing. CICS also offers features in this space, like shared temporary storage queues.

We may also need Sysplex-based features from middleware. For example, IBM MQ queue sharing allows tasks on different z/OS systems to share the same MQ queues, channels and other MQ resources.

And then, there are the application issues.

Using the Features: Active-Active

Let's look at our client. The site had enabled DB2 data sharing, CPSM and VSAM RLS. We helped them enable MQ queue sharing, and worked with them to enable features like CICS shared temporary storage queues and VTAM generic resources. But this was just the beginning.

One of our applications was a CICS/COBOL/MQ/VSAM application. At the beginning of our project, this application ran in a single CICS region. It looked something like this:

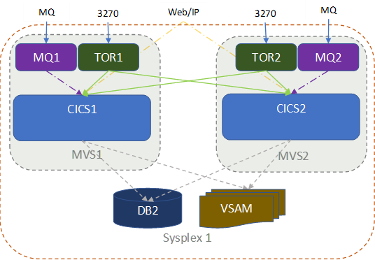

3270 traffic came in through a Terminal Owning Region (TOR). Web/IP traffic and MQ triggering went directly to our Application Owning Region (AOR). So, our application didn't need (or use) Sysplex features. But we wanted resilience: if one CICS, JES, TCP/IP, or even z/OS was unavailable, processing would continue on the other side. Or in other words, active-active. So, we wanted something like this:

To do that, we needed to do the work to actually use all these Sysplex features provided by z/OS, IMS, CICS, DB2 and IBM MQ. Let's look at what this meant.

MQ

An MQ queue manager may be connected to a queue sharing group (QSG), but that alone doesn't make its queues shared: we still needed to convert our MQ queues to shared queues. In our case, we redefined the queues as shared. We also converted model queues to global model queues, and channels to shared channels. We also implemented some of the nice MQ features that improve resilience, such as group units of recovery.

We needed to change how transactions were triggered by incoming MQ messages. We implemented queue owning regions (QORs) for improved load balancing and resilience. All queues were modified to send trigger messages to these QORs.

External MQ queue managers that connected in had to be modified to connect to the MQ queue sharing group, using a DVIPA address rather than the address of a single z/OS system.

VSAM

Our files needed to be converted to VSAM RLS so they could be opened by multiple CICS regions at the same time. In other words, we redefined the VSAM datasets with a storage class that points to the coupling facility structures required for RLS (a lock and a cache), and then modified the CICS file definitions to open the files in 'RLS mode.'

Our client also specified LOG(ALL) for their files: updates were recorded in a logstream for forward recovery. So, our CICS 'Log of Logs' had to be changed from a DASD-only logstream unique to each CICS region to a coupling facility-based logstream. We also tested that our forward recovery solution worked with VSAM RLS files updated from multiple z/OS systems.

CPSM

We needed to set up all the CPSM configurations for the CICS transactions: CICSPlex, scopes, transaction groups, workloads etc. We needed to select the right algorithm for CPSM to use for transaction routing: in our case, LNQUEUE. We also set up queue-owning and web-owning regions for better load balancing and resilience.
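To give a feel for what a queue-based routing algorithm such as LNQUEUE does, here is a minimal Python sketch. It is only an illustration of the general idea (send work to the least-loaded available region, without favouring a particular link type), not CPSM's actual implementation, which weighs additional factors such as region health. The `Region` class, its fields, the region names and the `choose_region` function are all hypothetical.

```python
from dataclasses import dataclass
from typing import Iterable, Optional


@dataclass(frozen=True)
class Region:
    """Hypothetical snapshot of one target CICS region (not a CPSM structure)."""
    name: str
    active_tasks: int       # tasks currently running or queued in the region
    max_tasks: int          # task limit for the region
    available: bool = True  # False if the region (or its z/OS system) is down


def choose_region(regions: Iterable[Region]) -> Optional[Region]:
    """Return the available region with the lowest relative task load.

    The connection type between router and target is deliberately ignored,
    so a region on another z/OS system is as eligible as a local one.
    Returns None when no region can accept work.
    """
    candidates = [
        r for r in regions
        if r.available and r.active_tasks < r.max_tasks
    ]
    if not candidates:
        return None
    return min(candidates, key=lambda r: r.active_tasks / r.max_tasks)


if __name__ == "__main__":
    targets = [
        Region("AOR1", active_tasks=40, max_tasks=100),
        Region("AOR2", active_tasks=10, max_tasks=100),
        Region("AOR3", active_tasks=0, max_tasks=100, available=False),
    ]
    chosen = choose_region(targets)
    print(chosen.name if chosen else "no region available")
```

In this sketch, an unavailable region is simply skipped and work flows to the remaining regions, which is the behaviour an active-active configuration relies on.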
These changes required updates to automation rules, with new error messages for CPSM-related resources and errors.

CICS Features

Our applications needed to use coupling facility data tables, named counters, shared temporary storage queues, and global ENQs. All of these needed to be set up in the CICS regions. Again, we needed to update automation to manage the new address spaces required for these, and to handle error messages that may occur.

We needed to work with the application teams on these features: when they should be used, how they can be used, and what is needed to use them.

CICS Affinities

We now had multiple 'copies' of our application running in different CICS regions on different z/OS systems. So, we needed to look for affinities: resources used by applications that may be a problem in a Sysplex. For example, an application using an in-memory table needed changing so that the tables were synchronized among all the CICS regions.

We also needed to look for other processing that could be a problem. For example, some programs 'hard-coded' CICS region names, z/OS system names and MQ queue manager names. Some used transaction classes for serialization, and others started CICS transactions that may now need to be routed to other CICS regions.

Other Issues

All our applications needed at some point to use the CPSM API to manage resources across a CICSPlex, or to work with other CICS regions. This needed to be set up, security determined, and application groups educated on how to use it.

And of course, there were a hundred other issues we found as the project moved along.

Conclusion

Sysplex provides the potential for a lot of load balancing and improved resilience. However, it doesn't come out of the box. The infrastructure must first be set up: things like DB2 data sharing, MQ queue sharing and CICSPlex. Then, the applications must be modified to use these features. In our case, this wasn't a simple task.
We dig deeper into some of the issues we found in other articles in this and the next edition of LongEx Mainframe Quarterly, as well as in our Share presentation in Fort Worth in February 2020.